I’m Eglė, CFO at Moterra, and I’ve been working to understand AI from the ground up. In my previous posts, I mapped out the AI landscape and showed how the different layers work together. But there was still a fundamental question I needed to answer: How does AI actually learn?

In my first post, I mentioned something that still fascinates me: when I paste text into ChatGPT, it splits it into small pieces called tokens (whole words, word fragments, or punctuation marks), looks at everything I’ve provided, then predicts the most likely next token. It adds that token to the text and repeats the step, again and again, until it has formed a complete response.
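To make that loop concrete, here is a minimal toy sketch in Python. Everything in it is an assumption for illustration: the probability table is hand-written, the tokenizer just splits on spaces, and the prediction looks only at the last token, whereas a real model uses a learned subword tokenizer and considers the whole preceding text.

```python
# Toy illustration only. The table below is hand-written and hypothetical;
# a real model learns billions of such relationships from data.
NEXT_TOKEN_PROBS = {
    "revenue": {"grew": 0.6, "fell": 0.3, "is": 0.1},
    "grew": {"by": 0.7, "in": 0.3},
    "by": {"ten": 0.6, "five": 0.4},
    "ten": {"percent": 0.9, "points": 0.1},
}


def tokenize(text):
    """Split text into lowercase tokens (real tokenizers use word fragments)."""
    return text.lower().split()


def generate(prompt, max_new_tokens=5):
    """Repeatedly append the most likely next token until nothing is known."""
    tokens = tokenize(prompt)
    if not tokens:
        return ""
    for _ in range(max_new_tokens):
        options = NEXT_TOKEN_PROBS.get(tokens[-1])
        if not options:
            break  # no learned pattern for this token, so stop
        next_token = max(options, key=options.get)  # pick the likeliest
        tokens.append(next_token)
    return " ".join(tokens)


print(generate("Quarterly revenue"))  # quarterly revenue grew by ten percent
```

Nothing in this loop checks whether revenue actually grew. It only follows the most probable path through the table, one token at a time.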

The critical point? It’s not actually thinking or understanding; it has simply become incredibly good at guessing what comes next based on patterns from massive amounts of text. This led to the logical next question: how did it develop this capability?

The Training Reality That Changed My Perspective

Here’s what I discovered: AI learning is the world’s most intensive pattern-recognition process.

Picture taking a vast share of the books, articles, websites, and documents available in digital form and feeding them to a system that does nothing but look for patterns. Not just obvious ones like “the word ‘bank’ often appears near ‘money’” but incredibly subtle statistical relationships, such as (to use a made-up figure) “when someone writes about quarterly earnings, the next sentence is 43% more likely to contain words about growth or decline.”

The system processes billions of these examples, building an internal map of how language works, how ideas connect, how conversations flow. What makes this fascinating is the sheer scale involved—it’s genuinely difficult to comprehend the magnitude of data processing required.
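A drastically simplified way to picture this "internal map" is a table of counts: for every word, how often each other word follows it. The sketch below builds such a table from four made-up sentences. It is an assumption-laden illustration, not how production models are trained; they adjust billions of numeric weights in a neural network rather than keeping explicit counts.

```python
from collections import Counter, defaultdict

# Hypothetical mini "training set"; real systems read billions of sentences.
CORPUS = [
    "revenue grew this quarter",
    "revenue grew last quarter",
    "revenue fell last year",
    "costs fell this quarter",
]


def train(sentences):
    """Count, for each word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn raw follow-up counts for one word into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word never appeared with a follower in training
    return {w: c / total for w, c in followers.items()}


model = train(CORPUS)
print(next_word_probabilities(model, "revenue"))
```

In this tiny data set, "revenue" is followed by "grew" two times out of three and by "fell" one time out of three, so the learned "pattern" is simply that ratio. Scale the same idea up enormously, and make the patterns far richer than adjacent word pairs, and you get closer to what training does.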

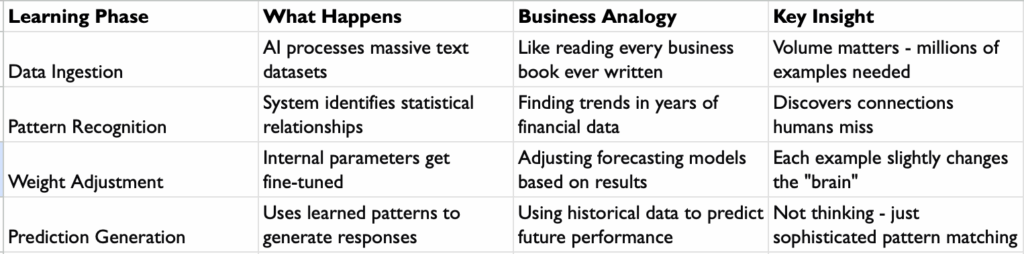

Breaking Down the Learning Process

To make sense of this, I created a framework that helped me visualize how AI learning actually works:

This table helped me understand that AI learning follows a logical process, just at a scale that’s hard to grasp.

The Uncomfortable Truth About AI "Knowledge"

Here’s what really shifted my perspective: AI doesn’t know things the way we know things.

When I analyze Q3 revenue performance, I understand what that means for the business, the implications for stakeholders, the market context. When AI generates text about Q3 revenue, it’s matching patterns from thousands of similar discussions, but without any real comprehension of what revenue represents.

It’s sophisticated mimicry, not understanding. But here’s the business opportunity: if you can control which patterns the AI references, you can make its responses incredibly reliable within your specific domain.

The Business Implications

Understanding this changes everything about AI implementation:

- Data Quality Determines Everything: The patterns AI learns are only as good as the training data. Biases, errors, or gaps get baked into the system’s predictions.

- Context Shapes Results: Small changes in how you frame questions can yield dramatically different results because they trigger different pattern matches.

- The Hallucination Challenge: AI can confidently generate plausible-sounding but completely fabricated information because it’s learned the patterns of how facts are presented, not which facts are true.
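The hallucination point can be illustrated with a toy word-pair model. This is only a sketch under stated assumptions: the company names and figures are invented, and the model is far cruder than a real one. The mechanism it shows is the same one described above, though: the system has learned how a revenue statement is phrased, not which statement is true.

```python
from collections import Counter, defaultdict

# Hypothetical training sentences. Note that "initech" never appears.
TRAINING_TEXT = [
    "acme revenue was 10 million",
    "globex revenue was 12 million",
    "acme revenue was 10 million last year",
]


def train(sentences):
    """Count which word follows which across all training sentences."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def complete(counts, prompt, max_new_words=3):
    """Extend the prompt by always choosing the most frequent follower."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


model = train(TRAINING_TEXT)
print(complete(model, "Initech revenue"))  # initech revenue was 10 million
```

The model has never seen a single fact about Initech, yet it produces a confident, well-formed figure, because "revenue was 10 million" is the most common pattern in its training text.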

This is why at Moterra, when we build internal knowledge assistants for your SharePoint documents, we constrain the AI to reference only your verified documents. That sharply reduces the room for hallucinations and keeps the pattern-matching inside a data set you control.
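As a rough sketch of what "constraining to verified documents" can mean, the toy example below answers only when a question overlaps sufficiently with a known document, and otherwise declines. It is not Moterra’s actual implementation: the document names, texts, and the `min_overlap` threshold are all hypothetical, and a production assistant would typically use a proper search index plus a language model rather than simple word overlap.

```python
import re

# Hypothetical verified documents, standing in for a curated document library.
VERIFIED_DOCUMENTS = {
    "travel-policy": "Employees must book flights through the approved travel portal.",
    "expense-policy": "Expense reports are due within 30 days of purchase.",
}


def words_in(text):
    """Return the set of lowercase words and numbers in a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def answer_from_documents(question, documents, min_overlap=2):
    """Answer only from the best-matching document, or decline to answer."""
    question_words = words_in(question)
    best_name, best_score = None, 0
    for name, text in documents.items():
        score = len(question_words & words_in(text))
        if score > best_score:
            best_name, best_score = name, score
    if best_score < min_overlap:
        # Declining is the point: no source, no answer.
        return {"source": None, "answer": "No verified document covers this question."}
    return {"source": best_name, "answer": documents[best_name]}


print(answer_from_documents("When are expense reports due?", VERIFIED_DOCUMENTS))
print(answer_from_documents("What is the dividend policy?", VERIFIED_DOCUMENTS))
```

The first question is answered from `expense-policy` with its source attached. The second matches nothing well enough, so the assistant says so instead of inventing a plausible-sounding policy.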

My Takeaway

AI is an incredibly sophisticated pattern-matching tool, not a thinking entity.

This explains why it can write convincingly about topics it doesn’t understand, why prompt changes dramatically alter responses, and why it excels at pattern recognition but struggles with true reasoning.

Understanding this distinction is crucial for business implementation. It’s the difference between setting realistic expectations and making costly assumptions about AI capabilities.